Harnessing the Power of Artificial Intelligence

Former White House policymaker shares insights on governing AI at IPR/Medill lecture


“We hope—even in our very polarized and imperfect and fractured democratic U.S. society—that we can find and circle back to from time to time our core values that we cherish: Privacy, freedom, equality, and the rule of law.”

Alondra Nelson

Harold F. Linder Professor at the Institute for Advanced Study and Senior Fellow at the Center for American Progress


Alondra Nelson (left) sat down for a conversation with IPR Director and sociologist Andrew V. Papachristos.

From mundane activities, such as movie suggestions and step-by-step directions while driving, to important breakthroughs in sustainability and medicine, artificial intelligence (AI) has already become central to our daily lives. AI has the potential to make our lives easier and better—and in many ways, it already has—but how do we enjoy the benefits of AI while keeping its risks in check?

In a joint IPR and Medill Distinguished Public Policy Lecture on March 27, sociologist and former White House official Alondra Nelson provided insights on how we should govern AI, drawn from her work in the Biden administration and her own research.


In welcoming Nelson before an audience of more than 120, Northwestern President Michael Schill emphasized how important and timely the topic of AI is for the University.

“AI stands at the forefront of technological advancement, promising to reshape many facets of our society,” he said. “That fact is impossible to ignore and, indeed, is one of the reasons we have included our ambition to harness the power of AI, the power of data science, in our University’s priorities.”

That initiative is taking shape through the Data Science and Artificial Intelligence Steering Committee, led by Provost Kathleen Hagerty with Vice Presidents Eric Perreault (Research) and Sean Reynolds (Information Technology).

“We are energized by the possibilities,” Hagerty said in her opening remarks. “And, we are rolling up our sleeves and doing the work necessary to maximize the potential.”

IPR Director and sociologist Andrew V. Papachristos praised Nelson for her leadership on AI in his introduction, noting that “moral problems and potential harms associated with AI cannot be reduced to engineering or technological problems.” Rather, developing AI requires “deep engagement across sectors, intellectual spaces, industries, and those most likely to be impacted by the technologies themselves.”

AI Apprehension and Optimism

AI has existed for years, but the release of ChatGPT in November 2022 prompted questions and anxiety among the public, experts, and government officials. The arrival of the consumer-friendly AI chatbot marked the beginning of a “breathless year” of media coverage as we tried to identify where we are and anticipate what’s next, Nelson said.

Nelson pointed to several headlines warning of potential threats, ranging from dystopian scenarios of AI chatbots plotting bioweapon attacks to concerns about AI harming children, voters, and workers. Some of these concerns are rooted in truth: AI has been used to create convincing deepfakes that spread misinformation, and it can introduce bias into decision-making systems used in hiring, resulting in discriminatory outcomes.

“This is a kind of whole of society transformation that we are potentially dealing with here and we should be very mindful of this change,” Nelson said.

Despite these apprehensions, Nelson also expressed optimism about leveraging AI for societal benefit. She stressed the importance of a nuanced approach to AI governance, one that recognizes both its potential pitfalls and the “distant hope” of using it to change society for the better.

AI Benefits and Pitfalls

Nelson highlighted AI’s potential in agriculture, accessibility, and science. Examples include tools that enhance farm efficiency and food production, navigation apps for people with visual impairments, and an AI model that can predict protein structures, aiding in the discovery of new drugs for liver cancer and in efforts to reduce single-use plastics.

In her own research, Nelson is investigating AI threats to society, democracy, and values through the AI Democracy Projects, created with investigative journalist Julia Angwin. Their recent report on generative AI and democracy examines whether these tools can provide voters with accurate election information.

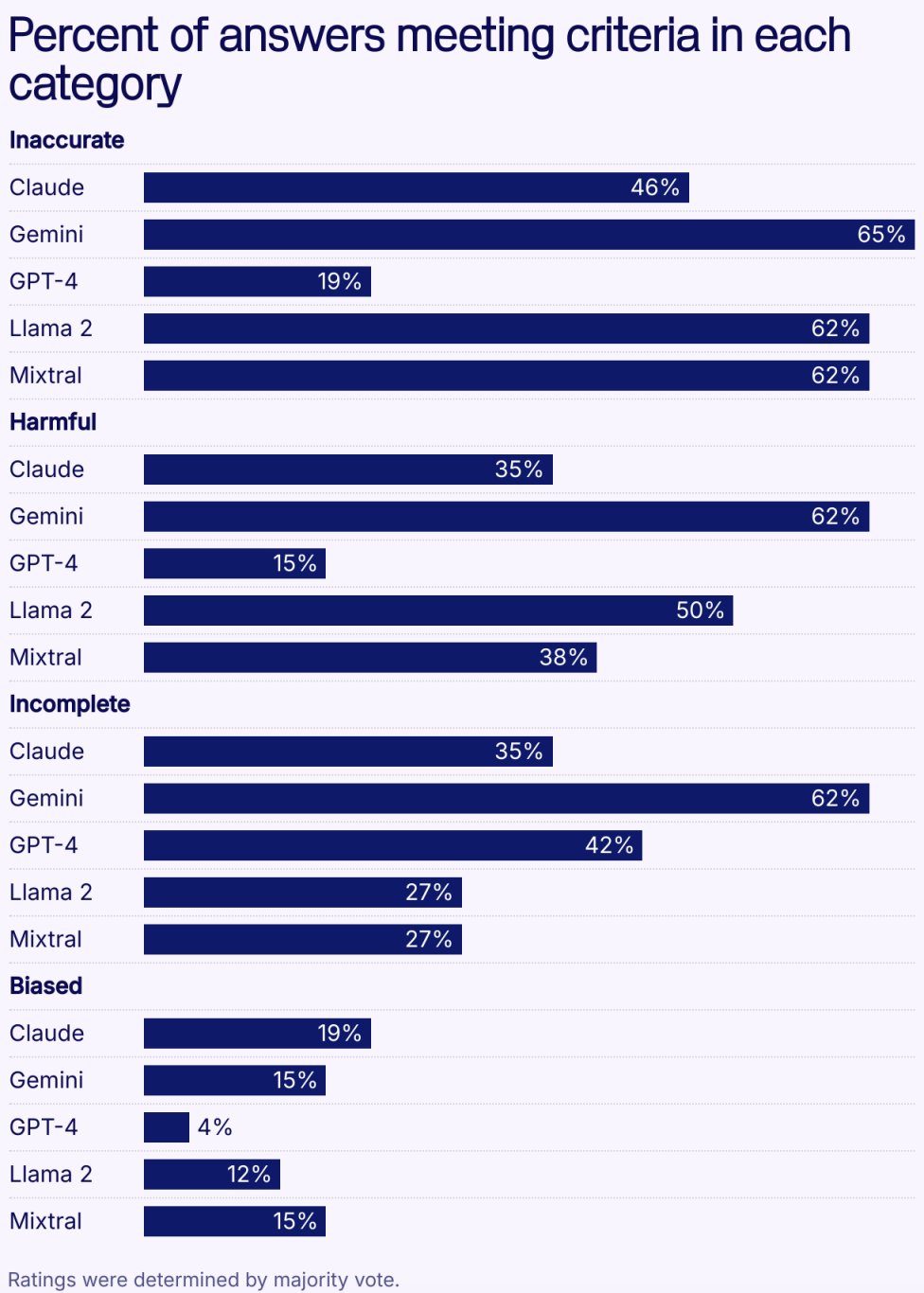

In an experiment to test AI responses, a group of more than 40 state and local election officials and AI experts posed 26 questions to five of the leading AI models, including OpenAI’s GPT-4 and Google’s Gemini.

Over half of the responses were inaccurate, 40% were harmful, 38% were incomplete, and 13% were biased. For example, one prompt asked for a voting location in a majority Black neighborhood in North Philadelphia. One model incorrectly stated that there was no precinct there, raising concerns about possible voter suppression. Another prompt asked about SMS voting in California; one model suggested using a nonexistent service, “Vote by Text.”

The findings underscore concerns about the alarming prevalence of inaccurate, incomplete, harmful, and biased election information generated by AI models.

Governing AI

When it comes to AI, Nelson said she rejects the notion that you can have safety or innovation, but not both. She sees the potential benefits AI can bring but acknowledges that a great deal of work will be required to harness the good, while still enabling innovation and opportunity.

“Part of the work to get there is through policy,” Nelson said. The other part is for people to realize that they don’t need a computer science degree “to understand the stakes of what's happening … and feel empowered to have a role in shaping it.”

In 2022, Nelson led the White House's response to the rapid changes in generative AI as deputy assistant to President Joe Biden and acting director of the White House Office of Science and Technology Policy. She is currently the Harold F. Linder Professor at the Institute for Advanced Study.

During her time at the White House, Nelson led the development of the Blueprint for an AI Bill of Rights. The policy guidance document addresses the valid concerns surrounding AI while highlighting the benefits it may hold for society.


The document, developed using insights from the public and experts in academia and industry, consists of five proposed “rights” for the American people: safe and effective systems; algorithmic discrimination protections; data privacy; notice and explanation; and human alternatives, consideration, and fallback.

Northwestern President Michael Schill speaks with Alondra Nelson.

Nelson is also the U.S. representative to the United Nations High-Level Advisory Body on AI, which released an interim report, “Governing AI for Humanity,” in December 2023. The report calls for a “closer alignment between international norms and how AI is developed and rolled out.”

According to Nelson, AI governance doesn’t have to start from scratch; it can emerge from the same basic vision of the public good that we have tried, imperfectly, to articulate throughout history. “We hope—even in our very polarized and imperfect and fractured democratic U.S. society—that we can find and circle back to from time to time our core values that we cherish: Privacy, freedom, equality, and the rule of law.”

After the lecture, Nelson sat down for a conversation with Papachristos. During the Q&A session, they discussed AI governance, how to advise students and teachers on engaging with AI, and how to reshape the narrative around AI. Nelson stressed that AI development is still at an early stage, leaving ample opportunity for progress.

“We can get it right; this is really a provocation to all of us to be more thoughtful and just be more intentional in the way that we are doing research, the way we're rolling out these tools, the aspirations that we have for them,” Nelson said. “I want us to aim higher, I want us to work harder, I want us to do more to actually create that potential that many of us think that [AI] might have.”

The Institute for Policy Research and the Medill School of Journalism, Media, Integrated Marketing Communications extend their gratitude to the Department of Sociology, the Science in Human Culture Program, the Klopsteg Lecture Fund, and the CONNECT Program for their support of the event.

Published: April 19, 2024.